Installation Instructions

Prerequisites

1. Set up a Kubernetes or OpenShift cluster

Supported version of Kubernetes: 1.21 and later. We recommend AWS (EKS) or Google Cloud (GKE), but you can install it on a standalone cluster as well.

Define your cluster size considering the following minimum requirements and your business needs:

1. Minimum requirements for one instance of the Catalyst Blockchain Manager Canton service:

- 2 CPU cores
- 4 GB RAM
- 10 GB disk space

2. Each node (domain, participant, or application) that will be deployed consumes additional resources. Minimum requirements for one node:

| Node | CPU | Memory, Gi | Storage, Gi |
|---|---|---|---|
| Domain | 1 | 1 | 1 |
| Participant | 1 | 1 | 1 |
| Application | 1 | 1 | 1 |

When deciding on the size of the cluster, consider the expected load on the nodes and increase these values accordingly.
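As a rough illustration of the sizing arithmetic above, the sketch below adds the per-node minimums to the service baseline. The function name `min_cluster_resources` is hypothetical, and the sketch treats GB and Gi as interchangeable, which is only good enough for a first estimate; real deployments should add headroom for load.

```shell
# Minimum cluster resources: service baseline plus one unit per deployed node.
# Baseline (from the requirements above): 2 CPU cores, 4 GB RAM, 10 GB disk.
# Per node (domain, participant, or application): 1 CPU, 1 Gi memory, 1 Gi storage.
min_cluster_resources() {
    nodes=$1
    cpu=$((2 + nodes))
    mem=$((4 + nodes))
    disk=$((10 + nodes))
    echo "cpu=${cpu} memory_gi=${mem} storage_gi=${disk}"
}

# Hypothetical deployment: 1 domain, 2 participants, 1 application = 4 nodes.
min_cluster_resources 4   # prints: cpu=6 memory_gi=8 storage_gi=14
```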

2. Install Helm on your workstation

Installation manuals: helm.sh/docs/intro/install/

No customization is needed.

Supported version of Helm: 3.*.
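One way to confirm the workstation has a supported Helm is to inspect the string printed by `helm version --short`. The helper name `is_helm3` is hypothetical; the sketch only checks that the version string starts with `v3.`.

```shell
# Succeeds only when the version string starts with "v3." (Helm 3.x).
is_helm3() {
    case "$1" in
        v3.*) return 0 ;;
        *)    return 1 ;;
    esac
}

# Usage (requires helm on PATH):
#   is_helm3 "$(helm version --short)" || echo "Helm 3.x is required" >&2
```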

3. Install Traefik ingress

An ingress controller is needed to route traffic and expose nodes (domains and applications). The Catalyst Blockchain Manager Canton service creates a CRD resource (an IngressRoute when Traefik is used) that is created and deleted automatically along with each application, and on demand for domains.

Installation manuals: github.com/traefik/traefik-helm-chart

No customization is needed. The default port (:443) for HTTPS traffic will be used.

We recommend installing Traefik in a namespace separate from the application (creating a namespace for the Catalyst Blockchain Manager Canton service is described in step 5).

Supported version of Traefik: 2.3.

4. Create an A record in a zone in your domain’s DNS management panel and assign it to the load balancer created during the Traefik or OpenShift installation

The Catalyst Blockchain Manager Canton service needs a wildcard record *.<domain> to expose nodes. All created nodes (domains, participants, applications) will have a <NodeName>.<domainName> address.

For example, if you are using AWS, follow these steps:

- Go to the Route53 service.
- Create a new domain or choose an existing domain.
- Create an A record.
- Switch “alias” to ON.
- In the “Route traffic to” field, select “Alias to application and classic load balancer.”
- Select your region (where the cluster is installed).
- Select an ELB from the drop-down list.*

*Choose the ELB that was automatically configured when the Traefik chart was installed as described in step 3 (or during the OpenShift installation if you use OpenShift). You can check the ELB with the following command:

kubectl get svc -n ${ingress-namespace}

where ${ingress-namespace} is the name of the namespace where the ingress was installed.

The ELB is displayed in the EXTERNAL-IP field.
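If you prefer to read the load balancer address without scanning the table output, a JSONPath query can extract it. This is a sketch: the function name `lb_hostname` is hypothetical, and it assumes the load balancer publishes a `hostname` (as AWS ELBs do); other platforms may publish an `ip` field instead.

```shell
# Prints the external hostname of the load balancer services in a namespace.
# $1: the namespace where the ingress controller was installed.
lb_hostname() {
    kubectl get svc -n "$1" \
        -o jsonpath='{.items[*].status.loadBalancer.ingress[*].hostname}'
}

# Usage (requires kubectl and cluster access):
#   lb_hostname "<ingress-namespace>"
```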

5. Create a namespace for the Catalyst Blockchain Manager Canton service application

kubectl create ns ${ns_name}

where ${ns_name} is the name of the namespace (can be any).

5.1 Get the credentials for the Helm repository in JFrog Artifactory from the IntellectEU admin team

5.2 Add the repo to Helm with the username and password provided:

helm repo add catbp https://intellecteu.jfrog.io/artifactory/catbp-helm --username ${ARTIFACTORY_USERNAME} --password ${ARTIFACTORY_PASSWORD}

As a result: "catbp" has been added to your repositories

6. Create an ImagePullSecret to access the Catalyst Blockchain Manager Canton service deployable images

For example, create this Secret, naming it intellecteu-jfrog-access:

kubectl create secret docker-registry intellecteu-jfrog-access --docker-server=intellecteu-catbp-docker.jfrog.io --docker-username=${your-name} --docker-password=${your-password} --docker-email=${your-email} -n ${ns_name}

where:

- ${your-name}: your Docker username.
- ${your-password}: your Docker password.
- ${your-email}: your Docker email.
- ${ns_name}: the namespace created for the Catalyst Blockchain Manager Canton service in the previous step.
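To double-check that the Secret holds the registry credentials you expect, you can decode its stored Docker config. This follows the inspection pattern from the Kubernetes documentation on private registries; the wrapper function `show_pull_secret` is a hypothetical name.

```shell
# Prints the decoded Docker config stored in an image pull secret.
# $1: secret name, $2: namespace.
show_pull_secret() {
    kubectl get secret "$1" -n "$2" \
        -o jsonpath='{.data.\.dockerconfigjson}' | base64 --decode
}

# Usage (requires kubectl and cluster access):
#   show_pull_secret intellecteu-jfrog-access "<ns_name>"
```

The output contains the registry password in clear text, so avoid running it in shared terminals or CI logs.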

If you want to use a readiness check with an image from a private repository, create a Kubernetes Secret with your credentials and specify it in the Helm chart when installing the Catalyst Blockchain Manager. Please refer to the official Kubernetes documentation: kubernetes.io/docs/tasks/configure-pod-container/pull-image-private-registry/. Helm chart configuration instructions are given in the Setup section of this guide.

7. Set up a Keycloak realm

Import the realm.json file to create necessary clients, scopes & users in your keycloak realm or create a new realm and

-

Create public client for canton-console-ui (set ID in helm values).

-

Create confidential client for canton-console (api) (set id and secret in helm values).

-

Grant following realm-admin client roles to the dedicated service account for canton-console client:

create-client,manage-clients,query-clients,view-clients&query-users -

Create

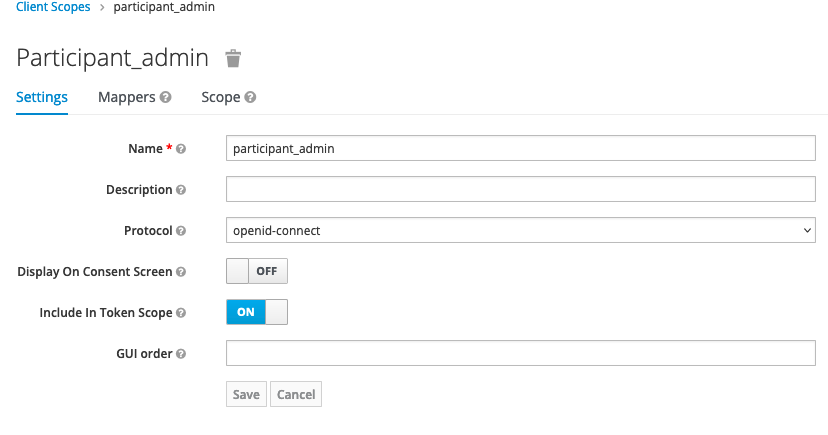

participant_adminclient scope and assign to canton-console client. Figure 1. Participant Admin Scope

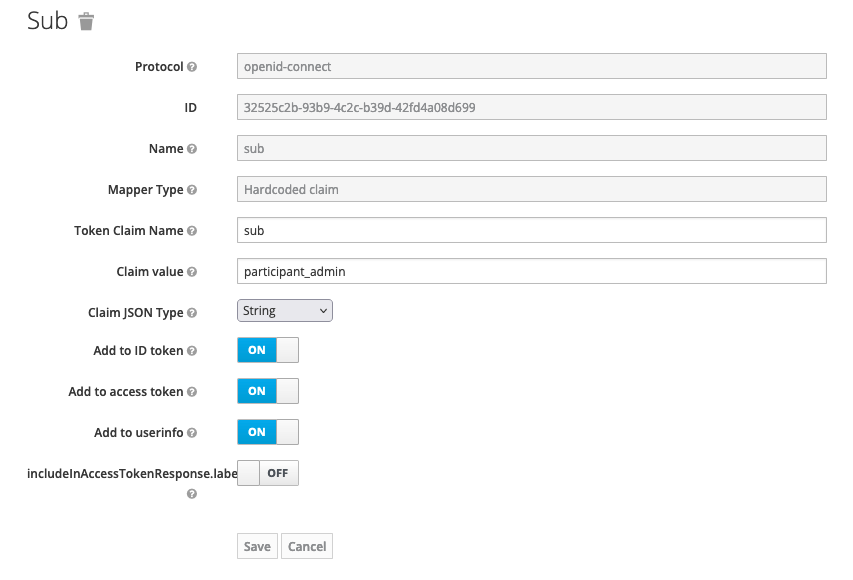

Figure 1. Participant Admin Scope Figure 2. Participant Admin Scope Mapper

Figure 2. Participant Admin Scope Mapper -

Create

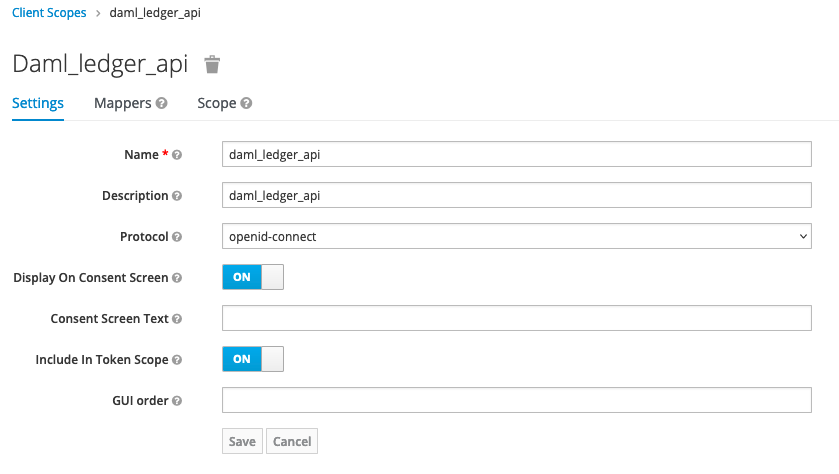

daml_ledger_apiclient scope and assign to canton-console client. Figure 3. Daml Ledger Api Scope

Figure 3. Daml Ledger Api Scope

-

-

Create confidential client for canton-console-operator (set id secret in helm values)

-

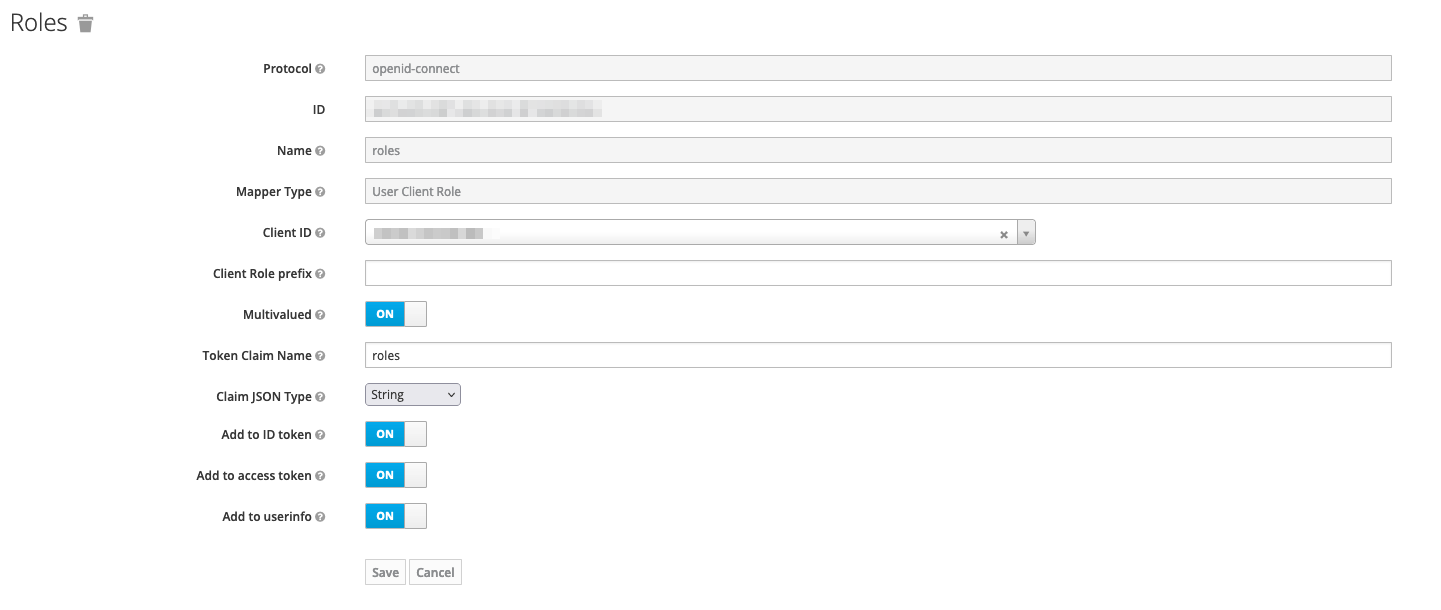

Add following mapper to ALL created clients to map user roles to correct claim (make sure to select correct client id for each):

|

User roles |

|

After creating realm, set url and realm name in helm values. |
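Before putting the URL and realm name into Helm values, it can help to confirm that the realm answers on its OpenID Connect discovery endpoint. The sketch below only builds the URL; the function name `realm_discovery_url`, the host, and the realm name are hypothetical. It assumes the base URL already includes any context path, since older Keycloak distributions serve under `/auth`.

```shell
# Builds the OpenID Connect discovery URL for a Keycloak realm.
# $1: Keycloak base URL, $2: realm name.
realm_discovery_url() {
    base=${1%/}   # drop one trailing slash, if present
    echo "${base}/realms/$2/.well-known/openid-configuration"
}

# Usage (hypothetical host and realm):
#   curl -fsS "$(realm_discovery_url "https://keycloak.example.com" "my-realm")"
```

A JSON document in the response indicates that the realm exists and the URL is reachable from your workstation.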

8. Set up monitoring

The installation of the Catalyst Blockchain Manager Canton service includes templates to assist monitoring. To observe metrics of all nodes, install the kube-prometheus-stack on your cluster.
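A minimal sketch of installing kube-prometheus-stack with Helm is shown below. The repository URL and chart name are the ones published by the prometheus-community project; the function name `install_monitoring`, the release name, and the default namespace `monitoring` are assumptions you can change.

```shell
# Installs (or upgrades) kube-prometheus-stack in its own namespace.
# MONITORING_NS is an assumed variable; it defaults to "monitoring".
install_monitoring() {
    ns="${MONITORING_NS:-monitoring}"
    helm repo add prometheus-community https://prometheus-community.github.io/helm-charts &&
    helm repo update &&
    helm upgrade --install kube-prometheus-stack prometheus-community/kube-prometheus-stack \
        --namespace "$ns" --create-namespace
}

# Usage (requires helm and cluster access):
#   install_monitoring
```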

Setup

Configure helm chart values

The full list of the Helm chart values:

# -- address where application will be hosted.
domainName: ""
auth:
  enabled: true
  keycloakUrl: ""
  keycloakRealm: ""
rbac:
  # -- Whether to create RBAC Resources (Role, SA, RoleBinding)
  enabled: true
  # -- Service Account Name to use for api, ui, operator
  serviceAccountName: canton-console
  # -- Automount API credentials for a Service Account.
  automountServiceAccountToken: false

# operator component values
operator:
  # -- number of operator pods to run
  replicaCount: 1
  # -- operator image settings
  image:
    repository: intellecteu-catbp-docker.jfrog.io/catbp/canton/canton-operator
    pullPolicy: IfNotPresent
    # defaults to appVersion
    tag: ""
  # -- operator image pull secrets
  imagePullSecrets:
    - name: intellecteu-jfrog-access
  # -- extra env variables for operator pods
  extraEnv: {}
  # -- labels for operator pods
  labels: {}
  # -- annotations for operator pods
  podAnnotations: {}
  # -- Automount API credentials for a Service Account.
  automountServiceAccountToken: true
  # -- security context on a pod level
  podSecurityContext:
    # runAsNonRoot: true
    # runAsUser: 4444
    # runAsGroup: 5555
    # fsGroup: 4444
  # -- security context on a container level
  securityContext: {}
  # Define update strategy for Operator pods
  updateStrategy: {}
  # -- CPU and Memory requests and limits
  resources: {}
    # requests:
    #   cpu: "200m"
    #   memory: "500Mi"
    # limits:
    #   cpu: "500m"
    #   memory: "700Mi"
  # -- Specify Node Labels to place operator pods on
  nodeSelector: {}
  # -- https://kubernetes.io/docs/concepts/scheduling-eviction/taint-and-toleration/
  tolerations: []
  # -- https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/#node-affinity
  affinity: {}
  # -- keycloak client secret is used to get token from keycloak (set in env-specific values)
  keycloakClient:
    secret: ""

# API component values
api:
  # -- the persistent volume claim for DARs
  darsPvc:
    enabled: true
    size: 5Gi
    mountPath: /dars-storage
    # If defined, storageClassName: <storageClass>
    # If undefined, no storageClassName spec is set, choosing the default provisioner. (gp2 on AWS, standard on GKE, AWS & OpenStack)
    storageClass: ""
    # Annotations for darsPvc
    # Example:
    # annotations:
    #   example.io/disk-volume-type: SSD
    annotations: {}
  # -- environment for api pods
  environment: ""
  # -- gateway configuration for channel subscription gateway
  gateway:
    events:
      heartbeat:
        enabled: false
        interval: 60 # interval in seconds
  # -- number of api pods to run
  replicaCount: 1
  # -- api image settings
  image:
    repository: intellecteu-catbp-docker.jfrog.io/catbp/canton/canton-console
    pullPolicy: IfNotPresent
    # defaults to appVersion
    tag: ""
  # -- api image pull secrets
  imagePullSecrets:
    - name: intellecteu-jfrog-access
  # -- extra env variables for api pods
  extraEnv: {}
  # -- labels for api pods
  labels: {}
  # -- api service port and name
  service:
    port: 8080
    portName: http
  # -- annotations for api pods
  podAnnotations: {}
  # -- Automount API credentials for a Service Account.
  automountServiceAccountToken: true
  # -- security context on a pod level
  podSecurityContext:
    # runAsNonRoot: true
    # runAsUser: 4444
    # runAsGroup: 5555
    # fsGroup: 4444
  # -- security context on a container level
  securityContext: {}
  # Define update strategy for API pods
  updateStrategy: {}
    # type: RollingUpdate
    # rollingUpdate:
    #   maxUnavailable: 0
    #   maxSurge: 1
  # -- CPU and Memory requests and limits
  resources: {}
    # requests:
    #   cpu: "200m"
    #   memory: "900Mi"
    # limits:
    #   cpu: "500m"
    #   memory: "1000Mi"
  # -- Specify Node Labels to place api pods on
  nodeSelector: {}
  # -- https://kubernetes.io/docs/concepts/scheduling-eviction/taint-and-toleration/
  tolerations: []
  # -- https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/#node-affinity
  affinity: {}
  # -- keycloak client secret is used to get token from keycloak (set in env-specific values)
  keycloakClient:
    secret: ""

# UI component values
ui:
  # -- gateway configuration for channel subscription gateway
  gateway:
    events:
      heartbeat:
        enabled: false
        interval: 60 # interval in seconds
  # -- ui autoscaling settings
  autoscaling:
    enabled: false
    minReplicas: 1
    maxReplicas: 5
    targetCPUUtilizationPercentage: 80
    # targetMemoryUtilizationPercentage: 80
  # -- number of ui pods to run
  replicaCount: 1
  # -- ui image settings
  image:
    repository: intellecteu-catbp-docker.jfrog.io/catbp/canton/canton-console-ui
    pullPolicy: IfNotPresent
    # defaults to appVersion
    tag: ""
  # -- ui image pull secrets
  imagePullSecrets:
    - name: intellecteu-jfrog-access
  # -- extra env variables for ui pods
  extraEnv: {}
  # -- labels for ui pods
  labels: {}
  # -- ui service port and name
  service:
    port: 80
    portName: http
  # -- annotations for ui pods
  podAnnotations: {}
  # -- Automount API credentials for a Service Account.
  automountServiceAccountToken: true
  # -- security context on a pod level
  podSecurityContext:
    # runAsNonRoot: true
    # runAsUser: 4444
    # runAsGroup: 5555
    # fsGroup: 4444
  # -- security context on a container level
  securityContext: {}
  # Define update strategy for UI pods
  updateStrategy: {}
  # -- CPU and Memory requests and limits
  resources: {}
    # requests:
    #   cpu: "100m"
    #   memory: "50Mi"
    # limits:
    #   cpu: "200m"
    #   memory: "200Mi"
  # -- Specify Node Labels to place ui pods on
  nodeSelector: {}
  # -- https://kubernetes.io/docs/concepts/scheduling-eviction/taint-and-toleration/
  tolerations: []
  # -- https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/#node-affinity
  affinity: {}
  # -- metrics server and Prometheus Operator configuration
  # -- keycloak client id is used to get token from keycloak (set in env-specific values)
  keycloakClient:
    id: ""

# -- Ingress for any ingress controller.
ingressConfig:
  provider:
    # -- Currently supported: [traefik, traefikCRD]
    name: traefikCRD
    traefik:
      ingressClass: ""
    traefikCRD:
      tlsStore:
        enabled: false
        name: default
  # -- specify whether to create Ingress resources for API and UI
  enabled: false
  tls:
    enabled: false
    # -- Certificate and Issuer will be created with Cert-Manager. Names will be autogenerated.
    # if `certManager.enabled` `ingressConfig.tls.secretName` will be ignored
    certManager:
      enabled: false
      email: "your-email@example.com"
      server: "https://acme-staging-v02.api.letsencrypt.org/directory"
    # -- secret name with own tls certificate to use with ingress
    secretName: ""

# -- Whether to parse and send logs to centralised storage
# FluentD Output Configuration. Fluentd aggregates and parses logs
# FluentD is a part of Logging Operator. CRs `Output` and `Flow`s will be created
logOutput:
  # -- This section defines elasticSearch specific configuration
  elasticSearch:
    enabled: false
    # -- The hostname of your Elasticsearch node
    host: ""
    # -- The port number of your Elasticsearch node
    port: 443
    # -- The index name to write events
    index_name: ""
    # -- Data stream configuration
    data_stream:
      enabled: false
      name: ""
      data_stream_template_name: ""
    # -- The login username to connect to the Elasticsearch node
    user: ""
    # -- Specify secure password with Kubernetes secret
    secret:
      create: false
      password: ""
      annotations: {}

Install the Catalyst Blockchain Manager Canton service

Use the following command:

helm upgrade --install ${canton_release_name} catbp/canton-console --values values.yaml -n ${ns_name}

where:

- ${canton_release_name}: the name of the Catalyst Blockchain Manager Canton service release. You can choose any name/alias. It is used to refer to the release when updating or deleting it.
- catbp/canton-console: the chart reference, where “catbp” is the repository name and “canton-console” is the chart name.
- values.yaml: a values file.
- ${ns_name}: the name of the namespace you created before.
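Because Helm merges the file passed with `--values` over the chart defaults, values.yaml only needs the settings you change. The sketch below writes a minimal override file. Every value in it is a placeholder, the function name `write_values` is hypothetical, and the key nesting is an assumption based on the values list in this guide, so compare it with the chart's own values before use.

```shell
# Writes a minimal Helm override file to the given path.
# All hostnames, realm names, IDs, and secrets below are placeholders.
write_values() {
    cat > "$1" <<'EOF'
domainName: "example.com"
auth:
  enabled: true
  keycloakUrl: "https://keycloak.example.com"
  keycloakRealm: "my-realm"
operator:
  keycloakClient:
    secret: "<operator-client-secret>"
api:
  keycloakClient:
    secret: "<api-client-secret>"
ui:
  keycloakClient:
    id: "<ui-client-id>"
EOF
}

write_values values.yaml
```

Keeping client secrets in a plain file is convenient for a first installation; for shared environments you may prefer to pass them through your own secret management.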

You can check the status of the installation by using these commands:

- helm ls: check the "status" field of the installed chart. Status “deployed” should be shown.
- kubectl get pods: get the status of applications separately. All pod statuses must be “Running.”
- kubectl describe pod $pod_name: get detailed information about pods.
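The checks above can also be combined into a single helper that blocks until the pods are ready. This is a sketch: the function name `wait_for_release` and the 300-second default timeout are assumptions, and `kubectl wait` with `--all` also waits on any unrelated pods in the namespace.

```shell
# Shows the release status, then waits for all pods in the namespace to be Ready.
# $1: release name, $2: namespace, $3: optional timeout (default 300s).
wait_for_release() {
    helm status "$1" -n "$2" &&
    kubectl wait --for=condition=Ready pods --all -n "$2" --timeout="${3:-300s}"
}

# Usage (requires helm, kubectl, and cluster access):
#   wait_for_release "<canton_release_name>" "<ns_name>"
```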